-

My cygwin setup

Part of changing jobs meant that I had to rebuild my Windows virtual machine. I’ve managed to get most of that down to a science at this point, but I’ve lost track of all the little changes I’ve made to Cygwin over the years. I thought I’d write a blog post, since it’ll help me remember, and possibly help others.

Ditching cygdrive

I don’t really like having to type /cygdrive/c – I’d much rather type /c, like Git Bash does out of the box.

The solution for this is to modify the /etc/fstab file and add this line at the end:

    c:/ /c fat32 binary 0 0

Don’t worry about the “fat32” in there, use that even if your file system is NTFS. You can do this for arbitrary folders, too:

    c:/SomeFolder /SomeFolder fat32 binary 0 0

Now I can simply type /SomeFolder instead of /cygdrive/c/SomeFolder.

Changing the home path

Cygwin’s home path is not very helpful. I choose to map it to my Windows home directory (again like Git Bash). The trick for this is to edit the file /etc/nsswitch.conf and add the following line:

    db_home: /%H

This sets the home to your Windows home directory. Note that this change affects all users, so if you have multiple users on Windows, don’t hard-code a particular path; instead use a placeholder like %H above, which expands per user.
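Since the point of this post is rebuilding a machine, here is a minimal sketch of how the /etc/fstab and /etc/nsswitch.conf edits could be scripted so that re-running them never duplicates a line. The helper name add_line_once is my own invention for illustration, not something Cygwin ships, and the demo writes to a scratch file rather than anything under /etc.

```shell
#!/usr/bin/env bash
# add_line_once is a hypothetical helper (not part of Cygwin):
# append a line to a file only if that exact line is not already there.
add_line_once() {
    local file="$1" line="$2"
    # -q: quiet, -x: match the whole line, -F: fixed string, not a regex
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Demonstrated against a scratch file so nothing under /etc is touched.
scratch="$(mktemp)"
add_line_once "$scratch" "db_home: /%H"
add_line_once "$scratch" "db_home: /%H"   # second call is a no-op
```

On a real install you would pass /etc/nsswitch.conf (or /etc/fstab with the mount line) instead of the scratch file, assuming your shell is allowed to write to those files.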

Prompt

I typically set my prompt to this in my .bash_profile file:

    export PS1="\[\e[00;32m\]\u\[\e[0m\]\[\e[00;37m\] \[\e[0m\]\[\e[00;33m\]\w\[\e[0m\]\[\e[00;37m\]\n\\$\[\e[0m\]"

This is similar to the one Cygwin puts there by default, but does not include the machine name.
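That prompt string is hard to read at a glance, so here is a sketch of the same value assembled from named pieces. The variable names (green, yellow, white, reset) are just labels I picked for illustration; the escape sequences themselves are copied from the line above.

```shell
# Color escapes wrapped in \[ \] so bash does not count them toward prompt width.
# Single quotes keep the backslashes literal until bash expands the prompt.
green='\[\e[00;32m\]'
yellow='\[\e[00;33m\]'
white='\[\e[00;37m\]'
reset='\[\e[0m\]'

# \u = user name, \w = working directory, \n = newline, \$ = "$" (or "#" for root).
# The '\$' piece is single-quoted on its own so that it cannot run into the
# following ${reset} and be parsed as "$$" (the shell's PID).
PS1="${green}\u${reset}${white} ${reset}${yellow}\w${reset}${white}\n"'\$'"${reset}"
export PS1
```

This should produce exactly the same PS1 as the one-liner; the only gain is that changing a color means editing one variable.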

vimrc

Not exactly Cygwin related, but here is a starter .vimrc file I use. I’m sure I’ll update it as I remember more.

    set bs=indent,eol,start
    set nocp
    set nu
    set tabstop=4 shiftwidth=4 expandtab
    syntax on

If anyone has some recommendations, leave them in the comments.

-

New Pasture

For the past eight and a half years, I’ve enjoyed many different challenges at Thycotic, from working on some tough security implementations to consulting. I’m always interested in new challenges and in seeing what else lies beyond where I am now. That is why I’ve accepted employment with Higher Logic. I’ll be joining their team and continuing what I do best: solving problems and doing my best to make customers happy.

I’m looking forward to it.

-

When a bool isn't a bool

Jared Parsons and I got into an interesting discussion on Twitter, and uncovered an interesting quirk in the C# compiler.

To begin, Jared already did the heavy lifting on the issue at hand, which is how a CLI bool can be defined, in his blog post Not all “true” are created equal. Jared’s example is different from mine; here is an independent example I created that shows the same problem.

    byte* data = stackalloc byte[2];
    data[0] = 1;
    data[1] = 2;
    var boolData = (bool*)data;
    bool a = boolData[0];
    bool b = boolData[1];
    Console.WriteLine(a); //True
    Console.WriteLine(b); //True
    Console.WriteLine(a == b); //False

Despite both a and b being “true” boolean values, they are not equal to one another. JavaScript has a similar issue, which is why the “not not” (!!) coercion idiom exists. You’d think a similar trick would work in C#:

    Console.WriteLine(a == !!b);

This still prints out false. Yet this prints out true:

    var c = !b;
    var d = !c;
    Console.WriteLine(a == d);

Seems like they are identical, no? Semantically they are, but functionally, they are not. In the former case, the C# compiler is optimizing away the double negation since it thinks it is pointless. Introducing the intermediate variables tricks the C# compiler and the double negation is no longer optimized away, thus the coercion is successful.

This seems to be a rare occurrence where the C# compiler is performing an optimization that actually alters behavior. Granted, it seems to be an extreme corner case, but I only found out about it because I actually ran into it at one point. In my opinion, the removal of the double negation at compile time is a bug. This optimization does appear to come from the C# compiler, not the JIT. The resulting IL is:

    IL_001a: ldloc.2
    IL_001b: ldloc.3
    IL_001c: ceq
    IL_001e: call void [mscorlib]System.Console::WriteLine(bool)

Notice that it just loads the two locals and immediately compares them; it makes no attempt to invert the value of local 3 (which is “b” in this case).

-

A FIPS primer for Developers

FIPS is a curious thing. Most developers haven’t heard of it, to which I say, “Good”. I’m going to touch very lightly on the unslayable dragon “140-1 and 140-2” part of FIPS.

Unfortunately, if you do any development for the Federal Government or a contractor, or sell your product to the government (or try to get on the GSA schedule, like 70), then you will probably come across it. Perhaps you maintain a product or a library that .NET developers use, and one of them says they get an error with your code like “This implementation is not part of the Windows Platform FIPS validated cryptographic algorithms.”

Let’s start with “what is FIPS?” A Google search will tell you it stands for “Federal Information Processing Standard”, which is a standard controlled by the National Institute of Standards and Technology (NIST). That in itself isn’t very helpful, so let’s discuss the two.

NIST is an agency that is part of the Department of Commerce. Their goal is to standardize certain procedures and references used within the United States. While seemingly boring, they standardize important things. For example, how much does a kilogram weigh, exactly? This is an incredibly important value for commerce since many goods are traded and sold by the kilogram. NIST, along with the International Bureau of Weights and Measures, standardizes this value within the United States to enable commerce. NIST also standardizes many other things, from taximeters to the emerging hydrogen fuel refilling stations.

NIST also standardizes how government agencies store and protect data. This ensures each agency has a consistent approach to secure data storage. This is known as the Federal Information Processing Standard, or FIPS. FIPS touches on things that are not related to security and communication, such as FIPS 10-4, which standardizes country codes. However, the one subject that eclipses all of the others in FIPS is data protection, from encryption (both symmetric and asymmetric) to hashing. FIPS attempts to standardize security procedures, data storage and communication, and to maintain a set of approved algorithms.

FIPS 140 encompasses requirements for cryptographic “modules”. FIPS refers to them as modules and not algorithms because a “module” may be an actual piece of hardware, or a pure-software implementation.

There are two key things to distinguish in the context of FIPS 140: validated and approved functions.

An approved function is a function, or algorithm, which FIPS 140-2 accepts, as documented in Annex A. This means that for certain applications, certain algorithms must be used to comply with FIPS 140-2. In the case of symmetric encryption, approved algorithms are AES, 3DES, and Skipjack. Each of these algorithms has its own NIST publication. AES’s, for example, is FIPS 197, and 3DES’s is Special Publication 800-67.

-

On Pair Programming

I’ll take a slight detour from my usual “fact” based blog posts and focus on a matter of opinion, one that I have swung like a pendulum on myself, which is pair programming. Pair programming is something I’ve been using for about 8 years now, and I think it is time I finally wrote some thoughts on it. My experience with pair programming has been “pair program 100% all the time”. If there was work to be done, we tried to pair program on it.

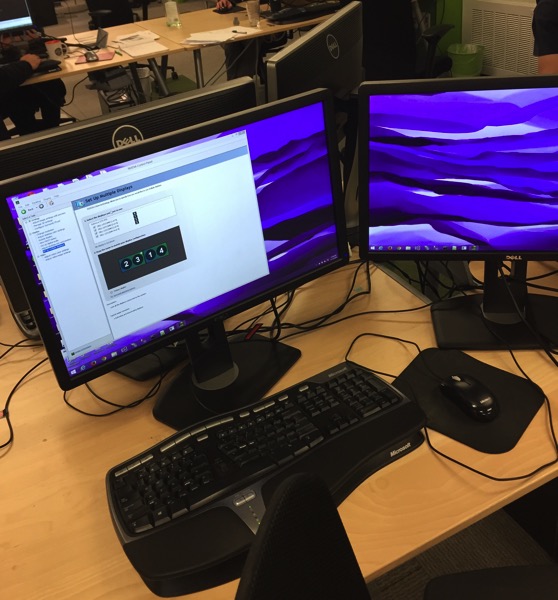

For those unfamiliar with pair programming, the gist of it is two developers, one computer. You have two (or four, six, etc.) monitors connected to a single computer, with two keyboards, two mice, and two developers work together to accomplish a goal.

Naturally, this might seem a bit confusing at first, but the origins hark back to the days of Extreme Programming – XP for short – something that I was first exposed to in 2006. The two developers work together, writing the same code, helping each other. One person is doing the “driving” of the computer, while the other is watching for mistakes, providing input, or mentoring. Eventually they switch. Really, there are books on the subject of how to do pair programming, but that’s the single paragraph version of it.

The uninitiated might first think, “Isn’t pair programming a tremendous waste of time?” Pair programming promises to make up for the additional use of a developer by offsetting it with increased quality. The argument is persuasive – bugs and defects are often the most expensive things to fix. If they can be limited during the development phase, and better quality and architecture are the result, then you end up with a net positive. You also get free knowledge transfer between team members.

Looking deeper, though, there are too many problems with pair programming. I won’t dispute that code quality usually ends up being better, and some bugs get caught. However, the cost associated with the gains is too steep. I could just as easily require three people to code review work. That would also result in better quality. So increased quality cannot be the only deciding factor as to whether or not pair programming is successful for a team.

When I was first introduced to pair programming, I was a junior but capable developer. Pair programming really worked well in my favor. Rather than be thrown into a code base I had absolutely no familiarity with, I was working with people that had experience with the code, and working with a senior team member. As we were working together, I was not only seeing the code produced, but his reasoning and thought process behind what was being accomplished.

At the time, I didn’t think much of how the senior developer felt about pair programming. Eight years later, I have a pretty good idea – it’s hard. Being in a constant mentoring mode while being driven by hard deadlines is a constant act of balancing, one that, after years and years of doing it all the time, takes its toll. This “senior-junior” pair really only benefits a single person – the junior. This, however, detracts from the senior developer’s sense of accomplishment, which is something most senior developers thrive on. The senior developer’s goal is not to educate the junior person, it is to get things done. At worst, this can contribute to burnout for that senior developer. The list of potential issues goes on, from issues of engagement to personality.

I’m not writing this to shoot pair programming down. I just dislike dogma. There is a right time and a wrong time for everything, and applying a principle before a situation has been fully understood is asinine. Pair programming, like anything, should be evaluated for whether it is applicable in a given situation. I think applying pair programming ad hoc can be powerful. Rather than doing pair programming all of the time, asking a colleague for a few minutes or an hour of their time to work through something difficult is a totally normal thing to do.

I would even take it a step further and replace pair programming with actual mentoring and coaching time if you need to get a junior up to speed. If structured mentoring is put in place, the junior developer gets better results. The senior developer is focused on that, so it doesn’t detract from their sense of accomplishment; rather, it contributes to it.