-

Public Key Pinning

I’ve added public key pinning, or HPKP for short, to my site. I initially wanted to wait until my new blog launched, but I was eager to play with it, so I pulled the trigger anyway.

So what is public key pinning, anyway? In short, it’s an HTTP header that tells user agents (browsers) exactly which public keys to expect for a particular domain, and instructs them to remember those keys for a specified period of time. The purpose of this is that if an active attacker were to obtain a fraudulent X.509 certificate, even one issued by a legitimate certificate authority, the browser would reject it, since its public key was not previously pinned.

The HPKP header (Public-Key-Pins is the name of the HTTP header) looks like this (line breaks added for readability):

```
Public-Key-Pins: pin-sha256="7qVfhXJFRlcy/9VpKFxHBuFzvQZSqajgfRwvsdx1oG8=";
    pin-sha256="/sMEqQowto9yX5BozHLPdnciJkhDiL5+Ug0uil3DkUM=";
    max-age=5184000;
```

There are a few things going on here. Each “pin-sha256” value is simply the SHA-256 digest of a public key (specifically, of its DER-encoded SubjectPublicKeyInfo structure), base64 encoded. The digest can be calculated from the private key like so:

```shell
# Extract the public key in DER form, hash it with SHA-256, and base64-encode the digest.
openssl rsa -in mykey.key -outform der -pubout | openssl dgst -sha256 -binary | base64
```

The digest for the current certificate on my site is /sMEqQowto9yX5BozHLPdnciJkhDiL5+Ug0uil3DkUM=.

There is also a “max-age” value, which tells the browser how long, in seconds, it should retain its “memory” of the pinned keys. For this site it is currently set to two months (5,184,000 seconds is 60 days). Browsers also support pinning a key by its SHA-1 digest, in which case you specify it as “pin-sha1” instead.

HPKP is a “trust on first use” security feature, meaning that the first time the browser encounters the pinned keys, it has no way to validate that what is set in the header is actually correct. When the user agent sees the site for the first time, it pins those keys. Every time the user agent connects to the server again, it re-evaluates the HPKP header. This lets you add new public keys, or remove expired or revoked ones. It also allows you to set max-age to zero, which tells the user agent to remove the pinned keys. Note that a user agent will only pin the keys if the HTTPS certificate is valid. As with HSTS, if the certificate is not trusted, the public key will not be pinned.
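To make the header syntax and the max-age arithmetic concrete, here is a minimal shell sketch that assembles the header value from pins you have already computed. `build_hpkp_header` is a hypothetical helper, not part of any tool; it also shows the max-age=0 form that asks browsers to forget the pins.

```shell
#!/bin/sh
# Two months of pinning in seconds: 60 days * 24 h * 60 min * 60 s.
max_age=$((60 * 24 * 60 * 60))   # 5184000, the value used in this site's header

# build_hpkp_header MAX_AGE PIN [PIN...]  (hypothetical helper)
# Prints a Public-Key-Pins value with one pin-sha256 directive per pin.
build_hpkp_header() {
  age=$1
  shift
  value=""
  for pin in "$@"; do
    value="${value}pin-sha256=\"${pin}\"; "
  done
  printf '%smax-age=%s\n' "$value" "$age"
}

backup='7qVfhXJFRlcy/9VpKFxHBuFzvQZSqajgfRwvsdx1oG8='
current='/sMEqQowto9yX5BozHLPdnciJkhDiL5+Ug0uil3DkUM='

build_hpkp_header "$max_age" "$backup" "$current"
# Publishing max-age=0 instead tells browsers to drop the pinned keys.
build_hpkp_header 0 "$backup" "$current"
```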

There is a potential issue, though, if you only pin one key: replacing a pinned key can lock someone out of the site for a very long time. Let’s say that the public key is pinned for two months, and someone visits the site, so their user agent records the pinned keys. One month later, you need to replace the certificate because its private key was lost or compromised, and you update the Public-Key-Pins header accordingly. However, the site will not load for that person. As soon as the TLS handshake completes, the browser notes that the new certificate’s public key does not match what was pinned, and immediately aborts the connection. It can’t evaluate the new header, because it treated the TLS session as invalid and never even made an HTTP request to the server. That person will not be able to load the site for another month.

This is why HPKP requires a “backup” key, and why I have two pinned keys. A backup key is an offline key that is not used in production, so that if the current one needs to be replaced, you can use the backup key to create a new certificate. User agents will then continue to load the site and pick up the updated HPKP values. You would then remove the pin for the revoked key and add another backup to the header. A backup key is so important that user agents mandate it: you cannot pin a single public key, and there must be a second one that is assumed to be a backup. If the backup pin actually matches a key in the TLS session’s certificate chain, the user agent ignores it, since a key that is in production cannot possibly be a backup.
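The backup rule can be modeled as a small check. This is a simplified sketch, not how any browser implements it: `hpkp_has_backup` is a hypothetical helper that takes the header value plus the pins of the keys in the served certificate chain, and succeeds only if at least one pin matches the chain (the production key) and at least one does not (the backup).

```shell
#!/bin/sh
# hpkp_has_backup HEADER CHAIN_PIN [CHAIN_PIN...]  (hypothetical helper)
# Simplified model: succeed if HEADER pins at least one key from the served
# chain and at least one key that is NOT in the chain (the offline backup).
hpkp_has_backup() {
  header=$1
  shift
  matched=0
  backups=0
  # Extract each pin-sha256="..." value from the header.
  for pin in $(printf '%s\n' "$header" | grep -o 'pin-sha256="[^"]*"' | sed 's/^pin-sha256="//; s/"$//'); do
    in_chain=0
    for chain_pin in "$@"; do
      if [ "$pin" = "$chain_pin" ]; then
        in_chain=1
      fi
    done
    if [ "$in_chain" -eq 1 ]; then
      matched=$((matched + 1))
    else
      backups=$((backups + 1))
    fi
  done
  [ "$matched" -ge 1 ] && [ "$backups" -ge 1 ]
}
```

With this site’s header, the chain contains the current key, so the other pin counts as the backup; a header listing only the production key, or one where both pinned keys are in the chain, fails the check.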

I used OpenSSL to generate a new public / private key pair:

openssl genrsa -out backupkey.key 2048 openssl rsa -in backupkey.key -outform der -pubout | openssl dgst -sha256 -binary | base64I can then use that backup key to create a new CSR should my current certificate need to be replaced. Using Chrome’s

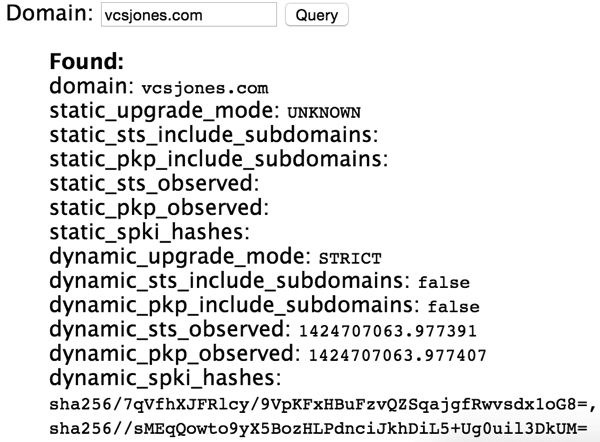

chrome://net-internals#hstspage, I can verify that Chrome is indeed pinning my public keys.
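The backup key only helps if a certificate built from it really matches the published pin. The shell sketch below creates a CSR from a freshly generated backup key and confirms that the pin computed from the key equals the pin computed from the public key embedded in the CSR. It runs in a scratch directory; the file names and the `/CN=example.com` subject are placeholders, not values from this site.

```shell
#!/bin/sh
# Sketch: confirm a CSR made from the backup key carries the pin already published for it.
set -e
cd "$(mktemp -d)"

# Same pipeline as above: base64 SHA-256 of the DER-encoded public key.
openssl genrsa -out backupkey.key 2048 2>/dev/null
key_pin=$(openssl rsa -in backupkey.key -outform der -pubout 2>/dev/null \
  | openssl dgst -sha256 -binary | base64)

# When the current key has to be retired, build a CSR from the backup key.
# The subject is a placeholder; use your real domain.
openssl req -new -key backupkey.key -subj "/CN=example.com" -out backup.csr

# Pull the public key back out of the CSR and hash it the same way.
csr_pin=$(openssl req -in backup.csr -pubkey -noout \
  | openssl pkey -pubin -outform der \
  | openssl dgst -sha256 -binary | base64)

echo "pin from key: $key_pin"
echo "pin from CSR: $csr_pin"
```

Because the pin covers only the public key, any certificate the CA issues for that CSR will match the backup pin as well.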

Dynamic public key pinning is relatively new; only Chrome 38+ and Firefox 35+ support it. It also carries much more risk than Strict-Transport-Security, since losing the pinned keys can make the site impossible to load. However, I do expect that this will become a good option for site operators who must strictly ensure their sites operate safely.

-

Review: Bulletproof SSL and TLS by Ivan Ristic

I don’t write book reviews very often; in fact, this is the first one on my blog. However, one book has caught my attention, and that is “Bulletproof SSL and TLS” by Ivan Ristic. I bought this book with my own money, and liked it enough to write a review of it. After giving this book a thorough read, and some rereading (I’ll explain later), I am left with very good impressions.

This book can be appealing or discouraging, depending on what you want. I had a hard time figuring out who the right audience for this book is, because the assumed knowledge varies greatly from chapter to chapter. Part of this book reads like a clear instruction manual on how to do SSL and TLS right. The other part is focused a bit more on the guts of TLS and its history. The latter requires a bit of background in general security and basic concepts in cryptography. While Ristic does a good job of explaining some cryptographic primitives, I could see certain parts of this book being difficult to understand for those not versed in those subjects. I think this is especially noticeable in Chapter 7, on protocol attacks.

Other chapters, like 13 through 16, are clear, well-written guides on how best to configure a web server for TLS. These chapters are especially good because they help you make informed decisions about what you are actually doing, and why you may or may not want certain configurations. Too often I see articles online that are blindly followed, and people aren’t making decisions based on their needs. He does a good job explaining many of these things, such as which protocols you want to support (and why), which cipher suites you should support (and why), and other subjects. This is in contrast to websites with very rigid instructions on protocol and cipher suite selection that may not be optimal for the reader, and that just end up getting copied and pasted. This is a much more refreshing take on these subjects.

However, I would still read the book cover to cover if you are interested in these subjects. Some things might not be extremely clear, but it’s enough to get a big-picture view of what is going on in the SSL/TLS landscape.

Another aspect of this book that I really enjoyed was how up to date it was. I opted to get a digital PDF copy of the book during the POODLE promotion week. It’s very surprising to be reading, in October 2014, about a vulnerability that was disclosed in October 2014. That’s practically unheard of with most books and publishers, and this book really stands out because of it. This is why I ended up rereading parts of the book: it has very up-to-date material.

While I am reluctant to consider myself an expert in anything, I did my best to configure my own server’s TLS before reading this book (enough to be happy with the protocols, cipher suites, and certificate chain). By the time I finished the book, I had made a few changes to my server’s configuration, such as fine-tuning the session cache.

My criticisms are minor ones; this is a very good book. Anyone who deals with SSL and TLS on any level should probably read it, as should those who are just curious.

-

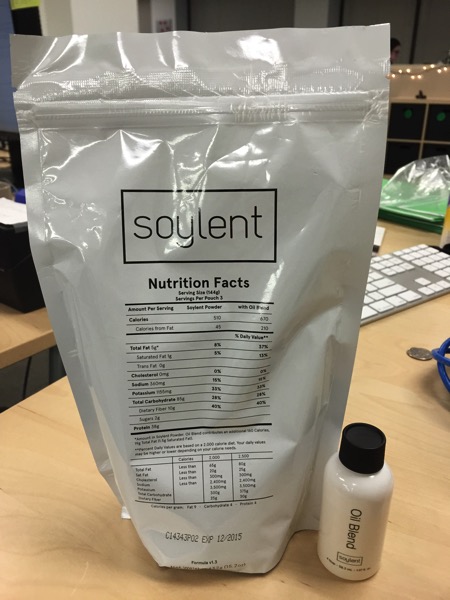

Impressions of Soylent

A little over a week ago, I got my first shipment of Soylent after patiently waiting for 5 months. I decided to try eating it every day for lunch, if only to see what all of the hype was about. For those who don’t know, Soylent is a powdered “food” that you mix with water and an oil additive, and which can supposedly replace meals, or even your entire diet. Indeed, there are people who claim they are living entirely off Soylent.

The idea is compelling for a couple of reasons. Firstly, if it lives up to its claims, it is cost effective. It works out to just over $4 a meal for me; with recurring shipments, it works out to $3.33 a meal. Packing lunch every evening is a bit of a hassle, and even when I do, I’m always in a rush in the morning and leave it in the fridge. Lunch in Washington DC can get expensive: a simple sandwich will run you about $6, and over a working week that adds up quite a bit. Halving those costs seemed appealing, and I wouldn’t have to do any preparation; I can just leave a bag of Soylent at work. Secondly, it supposedly provides balanced nutrition. It’s tempting to eat junk food for lunch and convince yourself it isn’t that bad.

Preparing it is dead simple. You mix water and Soylent together in a 2:1 ratio by volume, then add the oil mixture. The powder itself provides most of the nutrition and mass; the oil adds omega-3 fatty acids. The oil is algal, or algae-based, which means Soylent happens to be vegan, too.

Soylent provides a pitcher for making large quantities at once, which isn’t exactly what I wanted. Prepared Soylent lasts about 2 days when refrigerated, so I wanted to keep it in its powdered form as long as I could, and I didn’t want to occupy too much space in the work fridge. Soylent instructs you to mix it together and shake and stir it a bit, but I found the texture to be very grainy and clumpy at first, so I bought a Jaxx shaker for a few dollars. Preparing the Soylent in one of these fixes the clumpiness, and it comes out pretty smooth. As for taste, I think it is quite pleasant. I was rather surprised: it has a vanilla and oats taste, which makes sense, since most of Soylent’s mass and carbohydrates come from powdered oats. It isn’t too sweet, nor too bland.

It took me a day or two to figure out how to get “full” from it. Soylent is pretty much a liquid, and at first I never felt satiated by it; the hunger just eventually faded 30 minutes to an hour after eating. One thing that did help is splitting the meal. I’ll eat one half, wait an hour or so, then eat the second half. That helps me feel fuller, and it keeps me feeling full for longer.

Some people report that Soylent makes them rather gassy. I haven’t experienced this myself, although I’m not on a full Soylent diet. Soylent also recommends a few over-the-counter enzymes to help with digestion if it is a problem, but I haven’t had the need.

I decided to sign up for a recurring subscription since this seems to be working for me. I still enjoy food, but I’m rather happy with Soylent for lunches. I don’t know if I would recommend it if you think it is weird; it is weird. But if the benefits seem appealing to you and it’s something you want to try, go for it.

-

Content-Security-Policy Nonces in ASP.NET and OWIN, Take 2

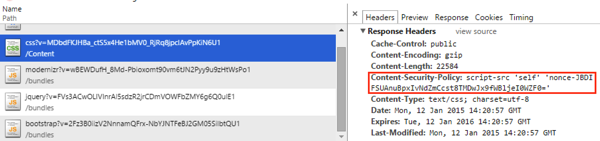

I last wrote about using nonces in content security policies with ASP.NET and OWIN. I’ve learned a few things since then that should help a little bit.

First, in my previous example, I used a bit of a shotgun approach by applying the CSP header in OWIN middleware. This worked, but it had one downside: it added the CSP header to everything, including non-markup content like JPGs and PNGs. While having the CSP header on these doesn’t hurt anything, it does add at least 28 bytes every time the content is served.

Since we are using MVC, it makes sense to move this functionality into an action filter and register it as a global filter. Here is the action filter:

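```csharp
using System;
using System.Security.Cryptography;
using System.Web;      // GetOwinContext() extension (Microsoft.Owin.Host.SystemWeb)
using System.Web.Mvc;

public sealed class NonceFilter : IActionFilter
{
    public void OnActionExecuting(ActionExecutingContext filterContext)
    {
        // Child actions share the parent's response; don't add a second nonce or header.
        if (filterContext.IsChildAction)
        {
            return;
        }

        var context = filterContext.HttpContext.GetOwinContext();

        // 32 random bytes from a cryptographically secure RNG.
        var nonceBytes = new byte[32];
        using (var rng = new RNGCryptoServiceProvider())
        {
            rng.GetBytes(nonceBytes);
        }
        var nonce = Convert.ToBase64String(nonceBytes);

        // Store the nonce for NonceHelper and send it in the policy.
        context.Set("ScriptNonce", nonce);
        context.Response.Headers.Add("Content-Security-Policy",
            new[] { string.Format("script-src 'self' 'nonce-{0}'", nonce) });
    }

    public void OnActionExecuted(ActionExecutedContext filterContext)
    {
    }
}
```

Then we can remove our middleware from OWIN. Finally, we add the filter to our global filters list: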

```csharp
public static void RegisterGlobalFilters(GlobalFilterCollection filters)
{
    filters.Add(new NonceFilter());
    // other filters omitted
}
```

The NonceHelper used for rendering the nonce in script elements doesn’t need to change. This adds the Content-Security-Policy header to MVC responses, but not to static content like CSS or JPG files. With a small change, such as storing the nonce in HttpContext.Items instead of the OWIN context, the same filter would also work in projects that don’t use OWIN at all.

This does put more of a burden on you to add Content-Security-Policy in other places, though, such as static HTML files, or anywhere else the browser is interpreting markup.

-

Content-Security-Policy Nonces in ASP.NET and OWIN

I’ve been messing around with the latest Content-Security-Policy support in Chrome, and wanted to try using the nonce feature for whitelisting inline scripts. It looks like it will be less of a pain than hashes, and should simplify the case of dynamic JavaScript.

The basic theory is this: when I send my Content-Security-Policy header, I include a randomly generated nonce, like this:

```
Content-Security-Policy: script-src 'self' 'nonce-[random nonce]'
```

Where [random nonce] is a securely generated nonce. This nonce will be unique for every single response from the server.
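To see what the header looks like with a real nonce, here is a minimal shell sketch. It only illustrates the format; in practice the server must generate a new nonce for every response. It assumes `openssl rand -base64 32` as the source of the 32 random bytes.

```shell
#!/bin/sh
# One fresh nonce: 32 bytes from OpenSSL's CSPRNG, base64-encoded.
nonce=$(openssl rand -base64 32)
# The policy value carries the nonce as a 'nonce-...' source.
csp_value="script-src 'self' 'nonce-${nonce}'"
printf 'Content-Security-Policy: %s\n' "$csp_value"
```

Running it twice prints two different nonces, which is exactly the per-response behavior the header relies on.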

On the web content side of things, wherever I have a `<script>` tag, I include an attribute called “nonce” with the same value:

```html
<script nonce="[random nonce from header]" type="text/javascript">
    //my script body
</script>
```

When the browser encounters the inline JavaScript block, it checks that the nonce attribute matches what was sent in the header, and only executes the script if it does.

This prevents an attacker from injecting scripts into a page with XSS. A common scenario might be an attacker registering the username `<script>/*do bad things in JS*/</script>`. If this were something like a chat application that lists every active user, the attacker would be able to execute JavaScript in other users’ sessions. Ouch.

However, with the nonce in place, injected script tags will not run, since the nonce changes on every response and the attacker cannot predict it. Instead, the browser refuses to execute the script and reports a Content Security Policy error:

Refused to execute inline script because it violates the following Content Security Policy directive: “script-src ‘self’”. Either the ‘unsafe-inline’ keyword, a hash (‘sha256-…’), or a nonce (‘nonce-…’) is required to enable inline execution.
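The check the browser performs can be illustrated with a toy model. This shell sketch is an assumption-laden simplification (real browsers parse the policy properly; this uses a substring match), and `inline_script_allowed` is a hypothetical helper: an inline script is allowed only if it carries a non-empty nonce that appears in the policy as `'nonce-<value>'`.

```shell
#!/bin/sh
# inline_script_allowed POLICY SCRIPT_NONCE  (hypothetical helper)
# Toy model of the browser check; not how browsers actually parse CSP.
inline_script_allowed() {
  p=$1
  n=$2
  # Injected markup, like the malicious username, has no valid nonce attribute.
  [ -n "$n" ] || return 1
  case "$p" in
    *"'nonce-${n}'"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

The injected username fails because it has no nonce attribute at all, and an attacker who copied a nonce from an earlier page fails too, because the current response’s header carries a different one.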

How do we accomplish this in ASP.NET MVC? Using the OWIN middleware, we can inject the header pretty easily:

```csharp
using System;
using System.Security.Cryptography;
using Owin;

public partial class Startup
{
    public void Configuration(IAppBuilder app)
    {
        app.Use((context, next) =>
        {
            // 32 bytes (256 bits) from a cryptographically secure RNG.
            var nonceBytes = new byte[32];
            using (var rng = new RNGCryptoServiceProvider())
            {
                rng.GetBytes(nonceBytes);
            }
            var nonce = Convert.ToBase64String(nonceBytes);

            // Store the nonce so views rendered for this request can read it.
            context.Set("ScriptNonce", nonce);

            // Allow same-origin scripts, plus inline scripts carrying this nonce.
            context.Response.Headers.Add("Content-Security-Policy",
                new[] { string.Format("script-src 'self' 'nonce-{0}'", nonce) });
            return next();
        });
        //Other configuration...
    }
}
```

You might have a more preferred way of doing this in OWIN, such as using a container to resolve a middleware implementation, but for simplicity’s sake, we’ll go with this. This does two things. First, it securely generates a 32-byte random nonce and adds it to the header. The CSP specification recommends that a nonce be at least 128 bits long (before encoding); a 256-bit nonce is big enough that it is next to impossible to guess (assuming the RNG isn’t broken), and small enough that it doesn’t add significant weight to the response. Second, it stores the nonce in the OWIN context so that we can use it elsewhere.
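The size trade-off is easy to check. Base64 produces 4 characters for every 3 bytes (rounding up), so a 128-bit nonce, the minimum the CSP specification recommends, encodes to 24 characters and the 256-bit nonce used here to 44. A quick shell sketch (`b64_nonce_len` is a hypothetical helper):

```shell
#!/bin/sh
# b64_nonce_len BYTES: length of a base64-encoded random nonce of BYTES bytes.
b64_nonce_len() {
  n=$(openssl rand -base64 "$1")
  printf '%s' "${#n}"
}

for bytes in 16 32; do
  echo "$((bytes * 8))-bit nonce: $(b64_nonce_len "$bytes") characters"
done
# 128-bit nonce: 24 characters
# 256-bit nonce: 44 characters
```

Those 44 characters appear once in the header and once in each script tag that carries the nonce.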

We then want to add this generated nonce into the response body. We can build a simple HTML helper to use this in our Razor views:

```csharp
using System.Web;      // IHtmlString, HtmlString, GetOwinContext()
using System.Web.Mvc;

public static class NonceHelper
{
    // Renders the nonce generated for the current request.
    public static IHtmlString ScriptNonce(this HtmlHelper helper)
    {
        var owinContext = helper.ViewContext.HttpContext.GetOwinContext();
        return new HtmlString(owinContext.Get<string>("ScriptNonce"));
    }
}
```

Then we can use this helper in our views:

```html
<script type="text/javascript" nonce="@Html.ScriptNonce()">
    //my script body
</script>
```

The rendered result is something like this:

```html
<script type="text/javascript" nonce="WpvQQK0FO/ZAljsQDGMLEgi2hrvIBVPQNak9zIWqRZE=">
    //my script body
</script>
```

This is a simple approach that works well. When Content Security Policy Level 2 gets broader adoption, I think this will be another effective tool web developers can use to mitigate XSS attacks.

Nonces don’t help you if the attacker can influence the body of a script element; hashes can protect against that. However, hashes have their own shortcomings, such as bloat in HTTP headers and being a little more fragile. Nonces, on the other hand, address the issue of a script that is truly dynamic, such as one that contains a CSRF token that is also generated per request.
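To make the fragility of hashes concrete: a CSP hash source is `'sha256-'` followed by the base64 SHA-256 digest of the script element’s exact text. This shell sketch (`csp_hash_source` is a hypothetical helper) shows that one extra leading space is enough to produce a different hash, so any change to the script body means updating the header, whereas a nonce stays valid however the body changes.

```shell
#!/bin/sh
# csp_hash_source SCRIPT_BODY  (hypothetical helper)
# Prints 'sha256-<base64 digest>' for the exact script text, whitespace included.
csp_hash_source() {
  digest=$(printf '%s' "$1" | openssl dgst -sha256 -binary | base64)
  printf "'sha256-%s'\n" "$digest"
}

# Same script, one extra leading space: two different hash sources.
csp_hash_source ' //my script body '
csp_hash_source '  //my script body '
```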