-

IIS and TLS

I recently made the claim that you should not use IIS to terminate HTTPS, and instead recommended using a reverse proxy like HAProxy or NGINX (ARR does not count, since it uses IIS).

If you are going to run your App on IIS for God’s sake don’t terminate SSL with IIS itself. Use a reverse proxy. (Nginx, HAProxy). - vcsjones

I thought I should add a little more substance to that claim and explain why I would recommend decoupling HTTPS from IIS.

IIS itself does not terminate SSL or TLS. That happens elsewhere in Windows, notably in http.sys, and is handled by a Windows component called SChannel. IIS’s ability to terminate HTTPS is therefore governed by what SChannel can, and cannot, do.

The TLS landscape has been moving very quickly lately, and we keep finding more and more problems with it. As these problems arise, we need to react to them quickly. Our ability to react is limited by the options we have and by what our TLS implementation can do for us.

SChannel limits our ability to react in three major ways.

The first is that the best cipher suites available to us today are still not good enough on Windows (as of Windows Server 2012 R2). The two big omissions are `TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256` and `TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384`. These two cipher suites are usually recommended as among the first cipher suites you offer. Oddly, there are some variants that are fairly close to them. The alternatives are `TLS_DHE_RSA_WITH_AES_128_GCM_SHA256` or `TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256` (and their AES256 / SHA384 variants). Both have their own issues. The former uses a slower, larger ephemeral key, and the latter uses CBC instead of an AEAD cipher like GCM. Stranger still, ECDHE and AES-GCM can co-exist, but only if you use an ECDSA certificate, so the cipher suite `TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256` does work.

This is a bit frustrating. Microsoft clearly has all of the pieces to make `TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256` a real thing. It can do ECDHE, it can do RSA, and it can do AES-GCM. Why they didn’t put those pieces together to make a highly desirable cipher suite, I don’t know.

The second issue is that even though these cipher suites are missing, and I’m sure people at Microsoft know about it, there hasn’t been an update to support them, despite the cipher suites being in wide adoption for quite some time now. SChannel just doesn’t get regular updates for new things. Most new features, such as new versions of TLS, have been limited to newer versions of Windows. Even when there was an update in May 2015 to add new cipher suites, ECDHE+RSA+AESGCM wasn’t on the list. The KB for the update contains the details.
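To make the comparison in the first issue concrete, here is a small Python sketch that splits IANA-style TLS 1.2 suite names into key exchange, authentication, bulk cipher, and MAC, then applies the criterion discussed above: ECDHE key exchange plus an AEAD cipher. The helper names are hypothetical, and this is pure string parsing; it says nothing about what SChannel actually offers on a given machine.

```python
from typing import NamedTuple


class Suite(NamedTuple):
    key_exchange: str
    authentication: str
    cipher: str
    mac: str


def parse_suite(name: str) -> Suite:
    """Split a name like TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256 into its four parts."""
    if not name.startswith("TLS_") or "_WITH_" not in name:
        raise ValueError(f"not a TLS 1.2-style suite name: {name}")
    kx_auth, bulk = name[len("TLS_"):].split("_WITH_", 1)
    key_exchange, _, authentication = kx_auth.partition("_")  # e.g. ECDHE / RSA
    cipher, _, mac = bulk.rpartition("_")                      # e.g. AES_128_GCM / SHA256
    return Suite(key_exchange, authentication, cipher, mac)


def is_preferred(suite: Suite) -> bool:
    """ECDHE for cheap forward secrecy plus an AEAD bulk cipher.

    Only AES-GCM and ChaCha20-Poly1305 are recognized as AEAD in this sketch.
    """
    aead = suite.cipher.endswith("_GCM") or suite.cipher == "CHACHA20_POLY1305"
    return suite.key_exchange == "ECDHE" and aead


for name in (
    "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",    # the suite SChannel lacks
    "TLS_DHE_RSA_WITH_AES_128_GCM_SHA256",      # DHE: slower, larger key
    "TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256",    # CBC: not AEAD
    "TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256",  # works, but needs an ECDSA cert
):
    print(name, is_preferred(parse_suite(name)))
```

Only the first and last names pass, which is the whole complaint: the RSA variant meets the criterion just as well as the ECDSA one does.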

The final issue is that even if SChannel does have all of the components you want, configuring it is annoying at best and impossible at worst. SChannel handles all TLS on Windows, so SChannel is what you configure. If, say, you wanted to disable TLS 1.0 in IIS, you would configure SChannel to do so. However, by doing that, you are also configuring every other component on Windows that relies on SChannel, such as Remote Desktop, SQL Server, Exchange, etc. You cannot configure IIS independently. You cannot turn off TLS 1.0 if you have SQL Server 2008 R2 running and want to use TLS for TCP connections to SQL Server. SQL Server 2012 and 2014 require updates to add TLS 1.2. Even then, I don’t consider it desirable that IIS cannot be configured on its own with regard to which TLS versions and cipher suites it supports.
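To show what that machine-wide scope looks like, here is a minimal Python sketch of the registry change involved. It assumes Microsoft’s documented SChannel protocol keys under HKEY_LOCAL_MACHINE (`...\SCHANNEL\Protocols\<protocol>\Server`, with `Enabled` and `DisabledByDefault` DWORD values); the helper names are hypothetical. Notice that nothing in the key path mentions IIS: the same values apply to every SChannel consumer on the machine.

```python
# Documented SChannel protocol location under HKLM. It is machine-wide:
# there is no per-application (e.g. IIS-only) variant of this key.
SCHANNEL_PROTOCOLS = r"SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\Protocols"


def protocol_key(protocol: str, side: str = "Server") -> str:
    """Registry subkey for one protocol (e.g. 'TLS 1.0') and side under HKLM."""
    if side not in ("Server", "Client"):
        raise ValueError("side must be 'Server' or 'Client'")
    return "\\".join([SCHANNEL_PROTOCOLS, protocol, side])


def disable_values() -> dict:
    """DWORD values that switch a protocol off for the given side."""
    return {"Enabled": 0, "DisabledByDefault": 1}


def disable_protocol(protocol: str, side: str = "Server") -> None:
    """Write the values. Windows only, needs an elevated process."""
    import winreg  # standard library, but only available on Windows

    with winreg.CreateKeyEx(
        winreg.HKEY_LOCAL_MACHINE, protocol_key(protocol, side), 0, winreg.KEY_SET_VALUE
    ) as key:
        for name, value in disable_values().items():
            winreg.SetValueEx(key, name, 0, winreg.REG_DWORD, value)
```

SChannel generally only picks up these values after a reboot, and afterwards Remote Desktop, SQL Server, and anything else using SChannel as a server is held to the same rule as IIS.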

Those are my arguments against terminating HTTPS with IIS. I would instead recommend using NGINX, HAProxy, Squid, etc. to terminate HTTPS. All of these receive updates to their TLS stack. Given that most of them are open source, you can also readily re-compile them with new versions of OpenSSL to add new features, such as CHACHA20+POLY1305.
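By contrast, OpenSSL-based stacks let each program carry its own TLS policy. As a rough illustration, here is a Python sketch using the standard `ssl` module, which is backed by OpenSSL just as NGINX and HAProxy usually are. It is not NGINX configuration; it only shows that the protocol floor and cipher list live inside the process that terminates TLS. ChaCha20-Poly1305 is only offered if the linked OpenSSL supports it (1.1.0 or later).

```python
import ssl


def make_server_context() -> ssl.SSLContext:
    """A TLS server policy that applies to this process only."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse TLS 1.0 and 1.1 for this listener without touching other programs.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # For TLS 1.2, offer only ECDHE key exchange with AEAD ciphers.
    # (TLS 1.3 suites are configured separately by OpenSSL and are unaffected.)
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    # A real listener would also call ctx.load_cert_chain(certfile, keyfile).
    return ctx


ctx = make_server_context()
for cipher in ctx.get_ciphers():
    print(cipher["protocol"], cipher["name"])
```

In NGINX, the same decisions are made per `server` block with the `ssl_protocols` and `ssl_ciphers` directives, leaving SQL Server, Remote Desktop, and everything else on the machine untouched.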

-

Using Chocolatey with OneGet and HTTPS

Having rebuilt my Windows 10 environment again, it was time to start installing the things I needed. I thought I should start making this scriptable, and I knew Windows 10 has a fancy new package manager called OneGet, so I thought I would give it a try.

I found a blog post from Scott Hanselman on setting up OneGet with Chocolatey. Having set up Chocolatey, I ran Get-PackageSource to check that it was there, and this was the output:

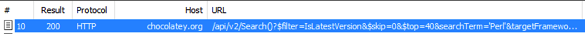

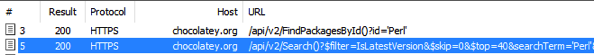

```
Name        ProviderName   IsTrusted  IsRegistered  IsValidated  Location
----        ------------   ---------  ------------  -----------  --------
PSGallery   PowerShellGet  False      True          False        https://www.powershellgallery.com/api/v2/
chocolatey  Chocolatey     False      True          True         http://chocolatey.org/api/v2/
```

All seemed OK, but I noticed that the Chocolatey feed location was not HTTPS. This was obviously a bit concerning. I fired up Fiddler to check whether it was actually making HTTP requests, and yes, it was.

Looking into Chocolatey, I found that it does support HTTPS. After a bit of tinkering, I found the right cmdlet to update the location:

```powershell
Set-PackageSource -Name chocolatey -NewLocation https://chocolatey.org/api/v2/ -Force
```

After that, I re-ran my query, and queries were now done over HTTPS.
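If you have several sources registered, a quick scripted check can catch the same mistake. This is a small Python sketch, not part of OneGet: `insecure_sources` is a hypothetical helper that takes a name-to-location mapping (transcribed from the Get-PackageSource output) and returns the HTTPS URL you might pass to `Set-PackageSource -NewLocation`. It only rewrites the scheme; it does not verify that the feed actually serves HTTPS, which is worth confirming first.

```python
from urllib.parse import urlsplit


def insecure_sources(sources: dict) -> dict:
    """Return {name: https_url} for every source whose location uses plain HTTP."""
    upgrades = {}
    for name, location in sources.items():
        parts = urlsplit(location)  # urlsplit lowercases the scheme
        if parts.scheme == "http":
            upgrades[name] = parts._replace(scheme="https").geturl()
    return upgrades


print(insecure_sources({
    "PSGallery": "https://www.powershellgallery.com/api/v2/",
    "chocolatey": "http://chocolatey.org/api/v2/",
}))
# {'chocolatey': 'https://chocolatey.org/api/v2/'}
```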

-

Experimenting with WebP

A few years ago, Google put out the WebP image format. I won’t dive into the merits of WebP; Google does a good job of that.

For now, I wanted to focus on how I could support it on my website. The thinking is that if I am happy with the results here, I can use it in other, more useful ways. The catch with WebP is that it isn’t supported by all browsers, so a flat “convert all images to WebP” approach wasn’t going to work.

Enter the Accept request header. When a browser makes a request, it includes this header to tell the server which content types it can handle and which it prefers. Chrome’s Accept header currently looks like this:

```
text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8
```

Chrome explicitly indicates that it is willing to process WebP. We can use this to conditionally rewrite which file the server returns.
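Reading that header correctly means looking at the q-values too, since `q=0` means “not acceptable”. Here is a minimal Python sketch (the function name is hypothetical) that treats WebP as supported only when `image/webp` is listed explicitly with a non-zero quality. It deliberately ignores the `*/*` wildcard, because browsers typically send it for every request, so it says nothing about whether they can decode WebP.

```python
def accepts_webp(accept: str) -> bool:
    """True if the Accept header lists image/webp explicitly with q > 0."""
    for item in accept.split(","):
        media_range, *params = [part.strip() for part in item.split(";")]
        if media_range.lower() != "image/webp":
            continue  # wildcards such as */* or image/* are not treated as WebP support
        q = 1.0  # default quality when no q parameter is given
        for param in params:
            key, _, value = param.partition("=")
            if key.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # malformed q: treat as not acceptable
        return q > 0
    return False


chrome = "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8"
print(accepts_webp(chrome))            # True
print(accepts_webp("image/webp;q=0"))  # False
print(accepts_webp("*/*"))             # False
```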

The plan was to process all image uploads and append “.webp” to the file name, so foo.png becomes foo.png.webp. We’ll see why in a bit. The other constraint is that I don’t want to do this for all images. Images that are part of WordPress itself, such as themes, will be left alone for now.

Processing the images was pretty straightforward. I installed the webp package, then processed all of the images in my upload directory. For now we’ll focus on just PNG files, but adapting this to JPEGs is easy.

```shell
find . -name '*.png' | (while read file; do cwebp -lossless $file -o $file.webp; done)
```

Note: This is a bit of a tacky way to do this. I’m aware there are probably issues with this script if the path contains a space, but that is something I didn’t have to worry about.
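Because the unquoted `$file` in that loop gets split on whitespace, here is a hedged Python alternative that sidesteps the space problem by handing arguments to cwebp as a list rather than through a shell. It follows the same naming scheme (foo.png to foo.png.webp), skips images that already have a WebP sibling, and supports a dry run. The function names are illustrative, and it assumes `cwebp` is on the PATH.

```python
import subprocess
from pathlib import Path


def webp_path(image: Path) -> Path:
    """foo.png -> foo.png.webp, keeping the original extension in the name."""
    return image.with_name(image.name + ".webp")


def cwebp_command(image: Path) -> list:
    """Argument list for a lossless conversion; no shell, so spaces are safe."""
    return ["cwebp", "-lossless", str(image), "-o", str(webp_path(image))]


def convert_tree(root: Path, dry_run: bool = False) -> list:
    """Convert every *.png under root that has no .webp sibling yet."""
    commands = []
    for image in sorted(root.rglob("*.png")):
        if not image.is_file() or webp_path(image).exists():
            continue  # skip directories and images converted on an earlier run
        cmd = cwebp_command(image)
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises if cwebp fails
    return commands


if __name__ == "__main__":
    for cmd in convert_tree(Path("."), dry_run=True):
        print(cmd)
```

Running it with `dry_run=True` first lists exactly which files would be converted.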

This converts existing images, and with some WordPress magic I configured it to run cwebp whenever new image assets are uploaded.

Now that we have side-by-side WebP images, I configured NGINX to conditionally serve the WebP image if the browser supports it.

```nginx
map $http_accept $webpext {
    default         "";
    "~*image/webp"  ".webp";
}
```

This goes in the `http` section of the NGINX configuration, since `map` is not allowed inside a `server` block. It defines a new variable called `$webpext` by examining the `$http_accept` variable, which NGINX sets from the request header. If `$http_accept` contains “image/webp” (matched case-insensitively), `$webpext` is set to .webp; otherwise it is an empty string.

Later in the NGINX configuration, I added this:
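The map’s logic is small enough to mirror in a few lines of Python, which makes one property easy to see: `~*` means a case-insensitive regular expression match, and it matches anywhere in the header. The function below is a hypothetical stand-in for `$webpext`, not anything NGINX exposes. One consequence of the substring match is that a header such as `image/webp;q=0`, which technically declines WebP, would still get `.webp`.

```python
import re

# Same pattern and flag as the map entry "~*image/webp": regex, case-insensitive.
WEBP_PATTERN = re.compile(r"image/webp", re.IGNORECASE)


def webpext(http_accept: str) -> str:
    """Mimic the $webpext map: '.webp' on a match, otherwise the default ''."""
    return ".webp" if WEBP_PATTERN.search(http_accept or "") else ""


chrome = "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8"
print(repr(webpext(chrome)))                  # '.webp'
print(repr(webpext("image/png,*/*;q=0.8")))   # ''
print(repr(webpext("image/webp;q=0")))        # '.webp' (q-values are ignored)
```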

```nginx
location ~* \.(?:png|jpg|jpeg)$ {
    add_header Vary Accept;
    try_files $uri$webpext $uri =404;
    # rest omitted for brevity
}
```

NGINX’s `try_files` is clever. For PNG, JPG, and JPEG files, we try to find a file whose name is the URI plus the `$webpext` variable. `$webpext` is empty if the browser doesn’t support WebP; otherwise it’s .webp. If that file doesn’t exist, NGINX moves on to the original. Lastly, it returns a 404 if neither of those worked. NGINX will automatically handle the content type for you.

If you are using a CDN like CloudFront, you’ll want to configure it to vary the cache based on the Accept header. Otherwise, if the CDN’s cache is primed by a browser that supports WebP, it will serve WebP images to browsers that don’t.
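Likewise, the lookup order of that `try_files` line can be sketched in Python. This is an approximation under stated assumptions: `uri` is already normalized the way NGINX’s `$uri` is, `docroot` stands in for the configured root, and `None` stands in for the 404. The first candidate that exists as a regular file wins.

```python
from pathlib import Path
from typing import Optional


def try_files(docroot: Path, uri: str, webpext: str) -> Optional[Path]:
    """Emulate `try_files $uri$webpext $uri =404` for one request."""
    for candidate in (uri + webpext, uri):
        path = docroot / candidate.lstrip("/")
        if path.is_file():
            return path  # NGINX would serve this file
    return None  # NGINX would answer with 404 here


# Example: with /img/foo.png and /img/foo.png.webp both on disk,
# try_files(root, "/img/foo.png", ".webp") picks foo.png.webp,
# while try_files(root, "/img/foo.png", "") falls back to foo.png.
```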

So far, I’m pleased with the WebP results in lossless compression. The images are smaller in a non-trivial way. I ran all the images through `pngcrush -brute` and `cwebp -lossless` and compared the results. The average difference between the crushed PNG and the WebP is 15,872.77 bytes (WebP being smaller). The maximum is 820,462. The maximum was 164,335 bytes, and the least was 1,363 bytes. Even the smallest difference was more than a whole kilobyte. That doesn’t seem like much, but it’s a huge difference if you are trying to make the most of every byte of bandwidth. Since none of the values were negative, WebP outperformed pngcrush on all 79 images.

These figures are by no means conclusive, since it’s a very small sample of data, but they are very encouraging.
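For anyone who wants to repeat the comparison on their own images, here is a small Python sketch that pairs each `foo.png` with its `foo.png.webp` and summarizes the byte differences (PNG minus WebP, so a positive value means WebP is smaller). It assumes the PNGs on disk are already the crushed versions; the function name and result keys are hypothetical, not output from pngcrush or cwebp.

```python
from pathlib import Path
from statistics import mean


def compare_sizes(root: Path) -> dict:
    """Summarize PNG-minus-WebP size differences (bytes) for paired files under root."""
    diffs = []
    for png in root.rglob("*.png"):
        webp = png.with_name(png.name + ".webp")
        if png.is_file() and webp.is_file():  # ignore PNGs without a WebP sibling
            diffs.append(png.stat().st_size - webp.stat().st_size)
    if not diffs:
        return {"count": 0}
    return {
        "count": len(diffs),
        "mean": mean(diffs),
        "max": max(diffs),
        "min": min(diffs),
        # True only if every WebP file came out smaller than its PNG.
        "webp_always_smaller": all(d > 0 for d in diffs),
    }


if __name__ == "__main__":
    print(compare_sizes(Path(".")))
```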

-

Site Changes

Eventually I’ll blog about them in detail, but I’ve made a few changes to my site.

First, I turned on support for HTTP/2. Second, I added support for the `CHACHA20_POLY1305` cipher suite. Third, if your browser supports it, images will be served in the WebP format. Currently the only browser that does is Chrome.

My blog tends to be a vetting process for adopting things. If all of these things go well, then I can start recommending them in non-trivial projects.

-

Exchange Issues on El Capitan with Office 365

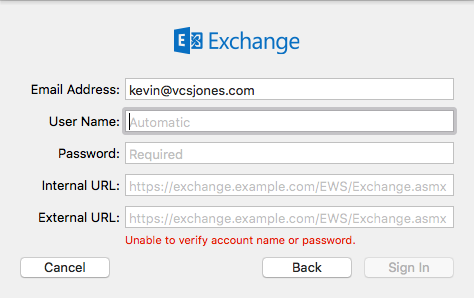

I just upgraded to El Capitan, and for the most part I haven’t run into any issues, except that my Exchange accounts now seemed wrong. They were asking for my password, and the username had changed from kevin@thedomain.com to just “kevin”.

To fix this, I removed the Exchange account, intending to re-add it. However, it would not accept my username and password.

The error was always “Unable to verify account name or password”, and I knew the password and username were correct.

What did work was completing the dialog with the Internal and External URLs. For Office 365, setting both URLs to “https://outlook.office365.com/EWS/Exchange.asmx”, filling in the rest, and using my full email address as the username worked.